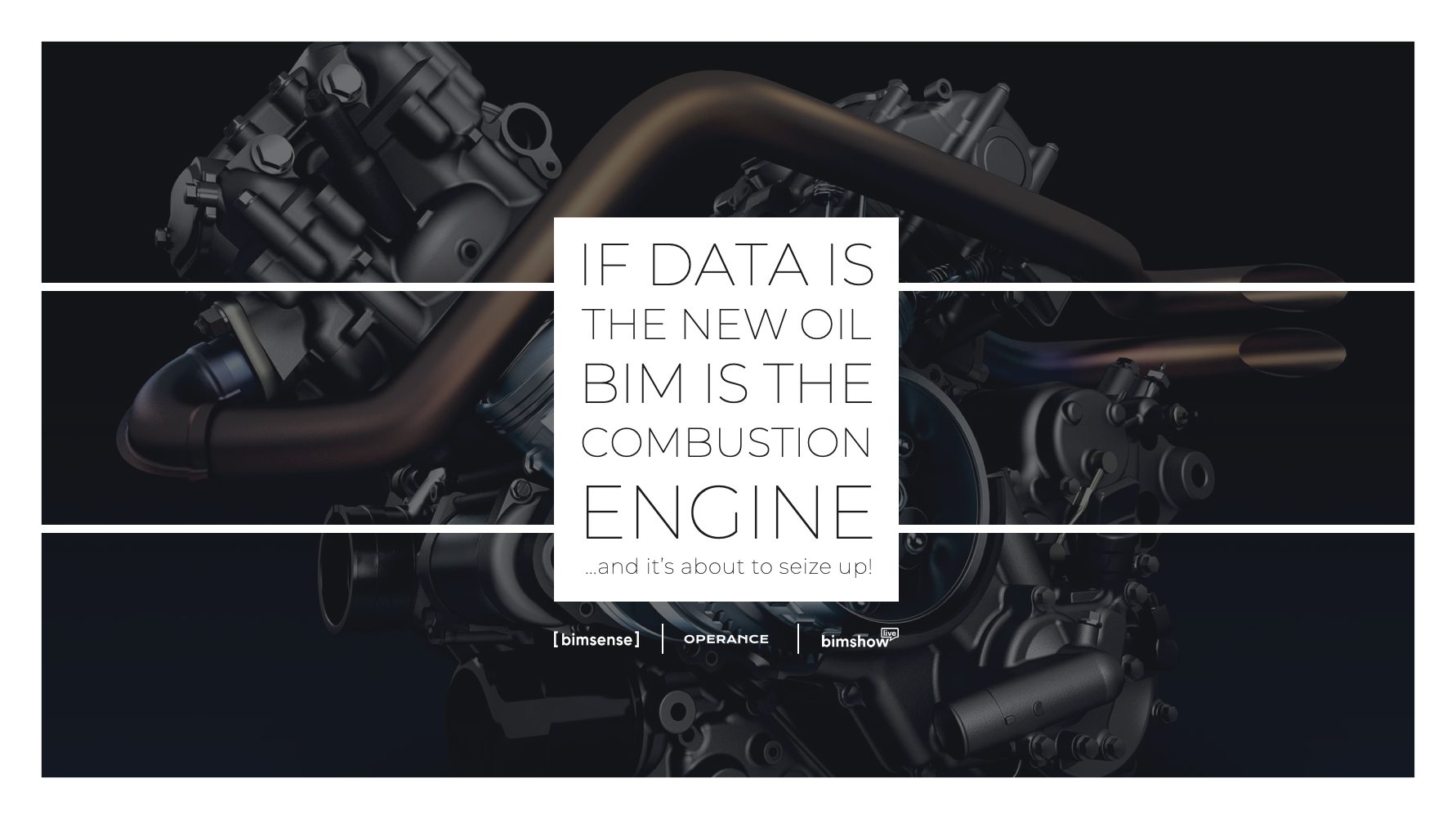

Bimshow Live 2020 Presentation

If Data Is The New Oil, Then BIM Is the Combustion Engine…and it’s about to seize up!

How Data Science, Artificial Intelligence and Machine Learning can enable quality BIM.

In this article, Ian Yeo kindly shares his Bimshow Live 2020 script on how Data Science, Artifical Intelligence (AI), Machine Learning and Deep Learning can enable quality BIM.

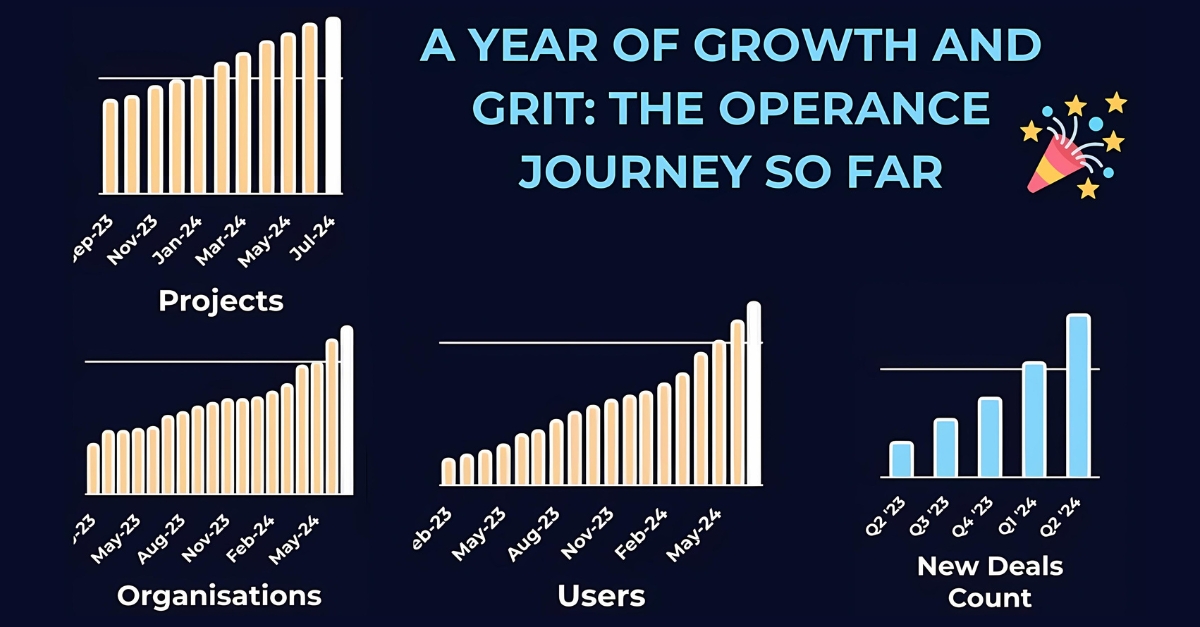

Ian provides real in-depth insight into how he uses coding and data science tools, along with a link to the presentation itself via Slideshare and numerous links to the various resorces and platforms we use at both Operance and Bimsense to create, collate, and provide quality BIM data for the benefit of Operance users.

Reccomended Tools

Global Data

Data and information has always been a big part of our lives, but the ease in generating new information and at which we can access this information is making data ever more important (and in many cases essential);

- Data is or should be driving decision making in business and government.

- The amount of data we are producing has never been greater than it is now.

- The forecast for the volume of data that we are due to create this year is 50.5 zetabytes (from statista.com), and one zetabyte is 1021 bytes or a 1 with 21 zeros after it. Or 1 trillion Gigabytes.

- The 50.5 zetabytes from this year alone, is equivalent to all the data that we ever produced before 2016.

- This all means that the digital universe has doubled every 2 ½ years. It’s predicted that in the future the doubling of data will reduce to every 2 years, so in 2022 we will generate over 100 zetabytes (100 trillion gigabytes).

- This doubling of data is giving us exponential growth.

This means that if we want to take control of data, there is never a better time to do it and taking control will enable you to gain from the available benefits.

BIM Data

So how does the exponential growth in the data universe directly impact upon us our work and BIM? Firstly our everyday work and our processes are becoming increasingly more digital and increasingly online by using new tools.

As an industry we are finding and generating new sources of information, for example in a relatively short time we have spawned a whole new industry of scanning and modelling existing buildings, which is all based upon gathering millions of geometric data points.

BIM clients want increasingly more data than ever in onerous EIRs. Long gone are the days when the physical building and a couple of ring binders of paperwork were enough to complete a project, we now also handover a whole suite of digital information, often including a digital version (or model representation) of the completed building.

If you carry out repetetive workflows that can be defined in clear steps then it’s almost certain that you can automate it.

Coding can be really beneficial. Coding, when you break it down is just a process of providing a clear sequence of instructions. The instructions are provided in a predefined format and that format is the language. I believe that coding and just simple coding when combined with your expertise can be your superpower!

IFC

It’s common now for EIR’s to require KPI reporting on either model graphical quality or data quality. As an example, an EIR requires that a model uses uniclass classifications and requires a percentage of the completeness of objects and spaces with classifications.

On the surface this appears to be an easy problem, but classifications mean different things to different people and to the application that generated the IFC file, it can be a classification in the strict IFC format. Or what about classifications from the the revit COBie plugin or some other bespoke approach? If an EIR isn’t explicit then it could be that all of those are acceptable.

We wanted our solution to be repeatable and automated, so we use Jupyter Notebooks with Python and Pandas;

- We produce a Pandas data frame from an IFC file (this does require some adjustments, to make it work, but again, once you have this setup this one process can be reused for all other IFC file work).

- Then, by a series of Pandas filters we obtain a list and a count of the number of model objects.

- We then look at objects that have IFC Uniclass classifications and combine this with objects that have classifications through a property or attribute (these steps are not simple, it’s not one to jump into as a trial project) and if a designer uses a different method for classification we just add a couple more rules or instructions (and over time we have a robust method of that covers most method for classifying objects).

We have an approach where we want to be as flexible as possible, we think there are project benefits in letting teams use the efficient processes that they have evolved rather than change, because we want data in a certain way or format.

It’s actually far easier to setup and run a script than to change a bespoke data type or format into a client or end-user-specific format that is provided, that the data being generated using tried and tested techniques is consistent, and reliable. We use this approach with the BIM data for Operance, afterall, why would we want to exclude models, just because they don’t follow a precise (but arbitrary) format?

What this means is that we have an automated approach where we link to a model, run the script and receive an output of the progress of the classifications. Which goes into a report which again is automated. But as this method uses keyword searches to obtain classifications as properties or attributes, it’s also extendable for obtaining other data such as manufacturer. And then extendable again to search for data for say maintainable assets which have a predefined classifications.

COBie

There are so many automations that we have that involve COBie, most using pandas within a Jupyter notebook where each sheet of a COBie file is imported as a separate data frame. Some of the automated checks are simple such as checking for and removing duplicates, adding documents for asset types and checking for the correct data formats. One that is slightly more complex involves manufacturers and obtaining contact information.

We take a manufacturer name and use various automated searches to find contact information for each manufacturer, their address, a standard contact email and telephone number. This uses a couple of APIs (to find a business address and telephone number), a bit of web crawling (for a standard contact email address), various checks to validate the data, combining all this information together and then saving it back into a COBie file contact sheet.

This doesn’t always produce the correct information, I would say on average 1 in 20 contacts have to be amended, but that means that the other 19 are fully automated. This includes adding the correct manufacturer contact email address to the individual assets types.

We also have also automated the generation of the COBie documents for maintainable assets, all we ask is that the file name that we receive from the contractors has a reference to the asset model number. The script then looks into the folder with the documents and populates the cobie.documents sheet. With other simple scripts for zones and systems.

The more that we use these automations the more valuable they become, every time we are increasing our return on investment.

BCF

We use Solibri as our preferred tool for model checking, from which we export the checks as BCF issues, which allows the project team, with the BIMcollab plugin) to view them in Revit or Archicad. We also provide an Excel export for those that only wish to view a summary of the checks. This is a really useful workflow and an effective way to collaborate.

But, how often do you find that this happens? Say the models just don’t federate correctly (there are various reasons why this doesn’t happen and I’ll not get into the ins and outs of federation) and that the best solution is for the models to always federate correctly. What I will explain is how we manipulate the BCF issues to work collaboratively even if we have different elevations. We want to find solutions to keep the team doing what they are good at.

The different model heights can be solved by establishing the offset error and adjusting the location in Solibri. We can then do the model check and generate all the BCF issues.

The problem comes when the designer with the incorrect model height attempts to view the BCF issues in their model. In the example the models were adjusted by 81.507m which means that the BIMcollab BCF will try to view the issue 81.507m away from the position of the model.

Now BCF issues are just just text files inside various folders within a zip file, and given a .bcf file extension rather than .zip. So with a jupyter notebook running python (and a couple of useful python packages) we have written rules to unzip the bcf file, list and open each file, read the content and then adjust all elevations references by the 81.507m. The content is then saved and repacked into a zip file

All of this is done within a saved jupyter notebook and after linking to the bcf file and providing the height adjustment it’s all fully automated. Of course, once we have this setup it can be extended, to add other useful data to the BCF, for example we have added revit BATIDs of the objects for designers who are not permitted to use the BIMcollab plugin, to enable them to view the objects involved in the issue.

All of those automations that we looked at (and of course all the automations that you are now going to develop and use) are amazing, they enable us to work more efficiently, they will help you to free up time for the creative work that we as humans, rather than machines, are uniquely placed do.

But all of these automations only enable us to work better, more efficiently and more reliably with our data. Essentially, doing more of the same.

The next step on the data ladder is to use all this data to gain insights and to inform decision making. For example, how do we assess whether a design is optimised for well being, or for space utilisation or CO2 emissions or how can we assess the likelihood of whether a project will finish on time and within budget.

For answers to questions like these where the number of different factors (or variables) are enormous and their interactions are too complex for us to understand the normal tools available to us are just not good enough.

Machine Learning

What we need to do is to use AI and in particular Machine Learning (ML) and a certain type of machine learning known as Deep Learning (DL). This involves us gathering the increasingly large amounts of data and analysing it using state of the art techniques. Deep learning has within the space of 3 or 4 years transformed the accuracy, reliability and quality of AI.

I’m aware that the idea of AI for most people is either something very scary that’s going to take over our lives on route to the apocalypse or is something that has benefits but is only accessible by the tech giants (the google, apple, facebooks and amazons). Neither of those views are correct.

On the first point, machine learning just aggregates lots of data to develop a machine learning model. Once a model has been produced, it takes an input and provides a result. The result is purely what the model assesses as the most likely result. And the most likely result is assessed using all the previous examples which used to make up the machine learning model, what it learnt from. Now I’m quite certain that that deep learning, which is by far the best AI technique that we have isn’t going to result in AI apocalypse, at least not anytime soon.

On the second point AI and machine learning is only for the tech giants. The tech giants are undoubtedly using and benefiting from AI. But the principles of machine learning were developed in academia, this means that it is available to all of us.

And better still there are machine learning tools available to us all. And guess what, most of them can be used in a Jupyter Notebook, using Python, a Pandas dataframe and open source machine learning packages.

Doing Artificial Intelligence

Before we finish here are a couple of examples that we have. The examples are in their early stages of implementation, but they have got to an acceptable level of accuracy for our internal use.

Building on our ability to assess model objects for Uniclass classifications, we have a machine learning model that automatically applies a classification for objects.

It assesses either an architectural or building services model and then takes data about the object such as IFC type, the object dimensions, the location relative to floor level, the name of the object and 10 other variables. And provides the most likely Uniclass product classification for the object. And it does this using a combination of what is called natural language processing for the object name and a tabular model for the remaining data.

We are using this internally, when we receive a client model for use within Operance which has no or very few classifications. But in theory this could be extended through plugins to directly apply classifications within Revit.

And then finally we have our Operance AI Object Detection where you can take a photo of a building asset, such as a door or a light fitting or a boiler and assess what it is from 130 defined Uniclass products. Go ahead, have a go yourself!

This uses a groundbreaking technique called transfer learning. Transfer learning builds directly on top of some of the largest available data sets (in this case imagenet which has some 14 million images in more than 20,000 categories.) and then adds our new data which for the initial learning uses around 100 images for each of the 130 building asset categories. During training and validation or the model we get an accuracy of around 86%. Which a few years ago would have been viewed as a cutting edge.

To give you an idea of how good this is, a highly cited academic paper from 2016 achieved an accuracy of 85% on just 20 different categories and these were very broad and different categories such as boat, bird and bike. Rather than the quite narrow and similar building assets such as a consumer unit compared to a distribution board and external louvre and an internal ventilation grille.

And this technology is accessible to all of us. Consider how object detection could be integrated into an automated assessment of progress, or assist with labelling site photographs or recording evidence of completed work.

As an industry we are contributing to the exponential increase in data and we we need to use our data more efficiently, but more importantly we need harness the data to gain not just incremental improvements but the step changes that this data can enable

If you want to find out more in your own time, we have provided a webpage where you can find out more and start your journey to turbo charge your BIM engine. This includes some of the best available learning resources (many of which are free) starting from learning the basics at code academy through to learning the cutting edge deep learning techniques from fastai. Plus links to enable you to install all the software.

Imagine if each of you could combine your unique expertise with the power of deep learning, and this expertise is available to other experts, in no time at all we will have something far more valuable than the sum of the parts, something that will enable us to make real changes to real people.

Thank you for reading this article, my email address is ian.yeo@bimsense.co.uk, I welcome your conversation!

If Data is the New Oil, BIM is the Combustion Engine: Slideshare

[/vc_column_text][/vc_column][vc_column width=”1/6″][/vc_column][/vc_row][vc_row full_width=”stretch_row” css=”.vc_custom_1582656145705{margin-top: 0px !important;margin-bottom: 0px !important;border-top-width: 0px !important;border-bottom-width: 0px !important;padding-top: 0px !important;padding-bottom: 20px !important;}” el_id=”Links”][vc_column width=”1/6″][vc_empty_space][/vc_column][vc_column width=”2/3″][evlt_heading main_heading_text=”Further reading” main_heading_font_size=”18px” main_heading_color=”#26292c” main_heading_css=”font-weight:700 !important;line-height:37px !important;” css=”.vc_custom_1582195822893{margin-top: 0px !important;margin-bottom: 0px !important;border-top-width: 0px !important;border-bottom-width: 0px !important;padding-top: 0px !important;padding-bottom: 0px !important;}”][vc_column_text css=”.vc_custom_1582651810390{margin-top: 0px !important;margin-bottom: 0px !important;border-top-width: 0px !important;border-bottom-width: 0px !important;padding-top: 0px !important;padding-bottom: 0px !important;}”]

- How to automate the boring stuff: Automate the Boring Stuff

- Learn to code for free: Codecademy (for some really good introductory lessons to python, that require no setup and can run in your browser)

- Learn to code, gain a new skill: Treehouse (another good learning resource with some free courses)

- The world’s largest web developer site: W3schools (provides a reference for most common python commands plus other languages).

- Open-source software, standards, and services: Jupyter (the easiest way to get up and running with jupyter notebooks is to use Anaconda).

- A couple of good starter guides for jupyter notebooks, including how to install: https://realpython.com/jupyter-notebook-introduction/ and https://www.dataquest.io/blog/jupyter-notebook-tutorial/.

- Online community to learn and share knowledge: Stackoverflow (the no.1 place for answers!).

- The world’s most popular data science platform: Anconda (provides a graphical interface for installing and accessing jupyter notebooks on both windows and macOS).

- Improve your Python skills and deep learning applications: Google Collab (as an alternative to running jupyter notebooks directly on your laptop, use this to get a notebook located in the cloud, up and running in a matter of seconds. Although, this does have the disadvantage of making it harder to access your local file and data).

- The open source deep learning framework from facebook PyTorch https://pytorch.org/ , we don’t directly use PyTorch but instead use the FastAI version https://docs.fast.ai/ which provides a simpler way to access the package

NEWSLETTER

Revolution Is Coming

Subscribe to our newsletter so we can tell you all about it.

You can unsubscribe at any time and we don’t spam you.